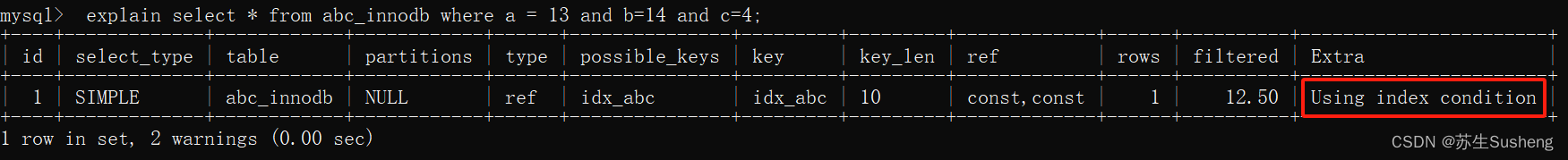

纯检测如下:

YOLOv5-Openvino和ONNXRuntime推理【CPU】

YOLOv6-Openvino和ONNXRuntime推理【CPU】

YOLOv8-Openvino和ONNXRuntime推理【CPU】

YOLOv9-Openvino和ONNXRuntime推理【CPU】

注:YOLOv8和YOLOv9代码内容基本一致!

全部代码Github:https://github.com/Bigtuo/YOLOv8_Openvino

1 环境:

CPU:i5-12500

Python:3.8.18

VS2019

注:Bytetrack中的lap和cython_bbox库需要编译安装,直接安装报错,故下载VS2019。

2 安装Openvino和ONNXRuntime

2.1 Openvino简介

Openvino是由Intel开发的专门用于优化和部署人工智能推理的半开源的工具包,主要用于对深度推理做优化。

Openvino内部集成了Opencv、TensorFlow模块,除此之外它还具有强大的Plugin开发框架,允许开发者在Openvino之上对推理过程做优化。

Openvino整体框架为:Openvino前端→ Plugin中间层→ Backend后端

Openvino的优点在于它屏蔽了后端接口,提供了统一操作的前端API,开发者可以无需关心后端的实现,例如后端可以是TensorFlow、Keras、ARM-NN,通过Plugin提供给前端接口调用,也就意味着一套代码在Openvino之上可以运行在多个推理引擎之上,Openvino像是类似聚合一样的开发包。

2.2 ONNXRuntime简介

ONNXRuntime是微软推出的一款推理框架,用户可以非常便利的用其运行一个onnx模型。ONNXRuntime支持多种运行后端包括CPU,GPU,TensorRT,DML等。可以说ONNXRuntime是对ONNX模型最原生的支持。

虽然大家用ONNX时更多的是作为一个中间表示,从pytorch转到onnx后直接喂到TensorRT或MNN等各种后端框架,但这并不能否认ONNXRuntime是一款非常优秀的推理框架。而且由于其自身只包含推理功能(最新的ONNXRuntime甚至已经可以训练),通过阅读其源码可以解深度学习框架的一些核心功能原理(op注册,内存管理,运行逻辑等)

总体来看,整个ONNXRuntime的运行可以分为三个阶段,Session构造,模型加载与初始化和运行。和其他所有主流框架相同,ONNXRuntime最常用的语言是python,而实际负责执行框架运行的则是C++。

2.3 安装

pip install openvino -i https://pypi.tuna.tsinghua.edu.cn/simple

pip install onnxruntime -i https://pypi.tuna.tsinghua.edu.cn/simple

YOLOv8ByteTrack_32">3 YOLOv8和ByteTrack原理

3.1 安装lap和cython_bbox

1. lap

cd lap-0.4.0

python setup.py install

2. cython_bbox【上传的文件可以直接进行第4步】

pip install cython -i https://pypi.tuna.tsinghua.edu.cn/simple【需先安装】

cd cython_bbox-0.1.3

(1)下载cython-bbox

(2)解压文件

(3)【已修改】在解压后的目录中,找到steup.py 文件,把extra_compile_args=[’-Wno-cpp’],修改为extra_compile_args = {‘gcc’: [’/Qstd=c99’]}

(4)在解压文件目录下运行

python setup.py build_ext install

YOLOv8ByteTrack_56">4 YOLOv8+ByteTrack主代码

下面代码整个处理过程主要包括:预处理—>推理—>后处理—>是/否跟踪—>画图。

假设图像resize为640×640,

前处理输出结果维度:(1, 3, 640, 640);

推理输出结果维度:(1, 84, 8400),其中84表示4个box坐标信息+80个类别概率,8400表示80×80+40×40+20×20;

后处理输出结果维度:(5, 6),其中第一个5表示图bus.jpg检出5个目标,第二个维度6表示(x1, y1, x2, y2, conf, cls);

跟踪输入维度:(-1, 5),其中第二个维度5表示(x1, y1, x2, y2, conf);

跟踪输出维度:(-1, 6),其中第二个维度6表示(x1, y1, x2, y2, conf, ids)。

注:YOLOv9换模型文件可直接使用!

import argparse

import time

import cv2

import numpy as np

from openvino.runtime import Core # pip install openvino -i https://pypi.tuna.tsinghua.edu.cn/simple

import onnxruntime as ort # 使用onnxruntime推理用上,pip install onnxruntime,默认安装CPU

import copy

from bytetrack.byte_tracker import BYTETracker

# COCO默认的80类

CLASSES = ['person', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus', 'train', 'truck', 'boat', 'traffic light',

'fire hydrant', 'stop sign', 'parking meter', 'bench', 'bird', 'cat', 'dog', 'horse', 'sheep', 'cow',

'elephant', 'bear', 'zebra', 'giraffe', 'backpack', 'umbrella', 'handbag', 'tie', 'suitcase', 'frisbee',

'skis', 'snowboard', 'sports ball', 'kite', 'baseball bat', 'baseball glove', 'skateboard', 'surfboard',

'tennis racket', 'bottle', 'wine glass', 'cup', 'fork', 'knife', 'spoon', 'bowl', 'banana', 'apple', 'sandwich',

'orange', 'broccoli', 'carrot', 'hot dog', 'pizza', 'donut', 'cake', 'chair', 'couch', 'potted plant', 'bed',

'dining table', 'toilet', 'tv', 'laptop', 'mouse', 'remote', 'keyboard', 'cell phone', 'microwave', 'oven',

'toaster', 'sink', 'refrigerator', 'book', 'clock', 'vase', 'scissors', 'teddy bear', 'hair drier', 'toothbrush']

class OpenvinoInference(object):

def __init__(self, onnx_path):

self.onnx_path = onnx_path

ie = Core()

self.model_onnx = ie.read_model(model=self.onnx_path)

self.compiled_model_onnx = ie.compile_model(model=self.model_onnx, device_name="CPU")

self.output_layer_onnx = self.compiled_model_onnx.output(0)

def predirts(self, datas):

predict_data = self.compiled_model_onnx([datas])[self.output_layer_onnx]

return predict_data

class YOLOv8:

"""YOLOv8 object detection model class for handling inference and visualization."""

def __init__(self, onnx_model, imgsz=(640, 640), infer_tool='openvino'):

"""

Initialization.

Args:

onnx_model (str): Path to the ONNX model.

"""

self.infer_tool = infer_tool

if self.infer_tool == 'openvino':

# 构建openvino推理引擎

self.openvino = OpenvinoInference(onnx_model)

self.ndtype = np.single

else:

# 构建onnxruntime推理引擎

self.ort_session = ort.InferenceSession(onnx_model,

providers=['CUDAExecutionProvider', 'CPUExecutionProvider']

if ort.get_device() == 'GPU' else ['CPUExecutionProvider'])

# Numpy dtype: support both FP32 and FP16 onnx model

self.ndtype = np.half if self.ort_session.get_inputs()[0].type == 'tensor(float16)' else np.single

self.classes = CLASSES # 加载模型类别

self.model_height, self.model_width = imgsz[0], imgsz[1] # 图像resize大小

self.color_palette = np.random.uniform(0, 255, size=(len(self.classes), 3)) # 为每个类别生成调色板

def __call__(self, im0, conf_threshold=0.4, iou_threshold=0.45):

"""

The whole pipeline: pre-process -> inference -> post-process.

Args:

im0 (Numpy.ndarray): original input image.

conf_threshold (float): confidence threshold for filtering predictions.

iou_threshold (float): iou threshold for NMS.

Returns:

boxes (List): list of bounding boxes.

"""

# 前处理Pre-process

t1 = time.time()

im, ratio, (pad_w, pad_h) = self.preprocess(im0)

print('预处理时间:{:.3f}s'.format(time.time() - t1))

# 推理 inference

t2 = time.time()

if self.infer_tool == 'openvino':

preds = self.openvino.predirts(im)

else:

preds = self.ort_session.run(None, {self.ort_session.get_inputs()[0].name: im})[0]

print('推理时间:{:.2f}s'.format(time.time() - t2))

# 后处理Post-process

t3 = time.time()

boxes = self.postprocess(preds,

im0=im0,

ratio=ratio,

pad_w=pad_w,

pad_h=pad_h,

conf_threshold=conf_threshold,

iou_threshold=iou_threshold,

)

print('后处理时间:{:.3f}s'.format(time.time() - t3))

return boxes

# 前处理,包括:resize, pad, HWC to CHW,BGR to RGB,归一化,增加维度CHW -> BCHW

def preprocess(self, img):

"""

Pre-processes the input image.

Args:

img (Numpy.ndarray): image about to be processed.

Returns:

img_process (Numpy.ndarray): image preprocessed for inference.

ratio (tuple): width, height ratios in letterbox.

pad_w (float): width padding in letterbox.

pad_h (float): height padding in letterbox.

"""

# Resize and pad input image using letterbox() (Borrowed from Ultralytics)

shape = img.shape[:2] # original image shape

new_shape = (self.model_height, self.model_width)

r = min(new_shape[0] / shape[0], new_shape[1] / shape[1])

ratio = r, r

new_unpad = int(round(shape[1] * r)), int(round(shape[0] * r))

pad_w, pad_h = (new_shape[1] - new_unpad[0]) / 2, (new_shape[0] - new_unpad[1]) / 2 # wh padding

if shape[::-1] != new_unpad: # resize

img = cv2.resize(img, new_unpad, interpolation=cv2.INTER_LINEAR)

top, bottom = int(round(pad_h - 0.1)), int(round(pad_h + 0.1))

left, right = int(round(pad_w - 0.1)), int(round(pad_w + 0.1))

img = cv2.copyMakeBorder(img, top, bottom, left, right, cv2.BORDER_CONSTANT, value=(114, 114, 114)) # 填充

# Transforms: HWC to CHW -> BGR to RGB -> div(255) -> contiguous -> add axis(optional)

img = np.ascontiguousarray(np.einsum('HWC->CHW', img)[::-1], dtype=self.ndtype) / 255.0

img_process = img[None] if len(img.shape) == 3 else img

return img_process, ratio, (pad_w, pad_h)

# 后处理,包括:阈值过滤与NMS

def postprocess(self, preds, im0, ratio, pad_w, pad_h, conf_threshold, iou_threshold):

"""

Post-process the prediction.

Args:

preds (Numpy.ndarray): predictions come from ort.session.run().

im0 (Numpy.ndarray): [h, w, c] original input image.

ratio (tuple): width, height ratios in letterbox.

pad_w (float): width padding in letterbox.

pad_h (float): height padding in letterbox.

conf_threshold (float): conf threshold.

iou_threshold (float): iou threshold.

Returns:

boxes (List): list of bounding boxes.

"""

x = preds # outputs: predictions (1, 84, 8400)

# Transpose the first output: (Batch_size, xywh_conf_cls, Num_anchors) -> (Batch_size, Num_anchors, xywh_conf_cls)

x = np.einsum('bcn->bnc', x) # (1, 8400, 84)

# Predictions filtering by conf-threshold

x = x[np.amax(x[..., 4:], axis=-1) > conf_threshold]

# Create a new matrix which merge these(box, score, cls) into one

# For more details about `numpy.c_()`: https://numpy.org/doc/1.26/reference/generated/numpy.c_.html

x = np.c_[x[..., :4], np.amax(x[..., 4:], axis=-1), np.argmax(x[..., 4:], axis=-1)]

# NMS filtering

# 经过NMS后的值, np.array([[x, y, w, h, conf, cls], ...]), shape=(-1, 4 + 1 + 1)

x = x[cv2.dnn.NMSBoxes(x[:, :4], x[:, 4], conf_threshold, iou_threshold)]

# 重新缩放边界框,为画图做准备

if len(x) > 0:

# Bounding boxes format change: cxcywh -> xyxy

x[..., [0, 1]] -= x[..., [2, 3]] / 2

x[..., [2, 3]] += x[..., [0, 1]]

# Rescales bounding boxes from model shape(model_height, model_width) to the shape of original image

x[..., :4] -= [pad_w, pad_h, pad_w, pad_h]

x[..., :4] /= min(ratio)

# Bounding boxes boundary clamp

x[..., [0, 2]] = x[:, [0, 2]].clip(0, im0.shape[1])

x[..., [1, 3]] = x[:, [1, 3]].clip(0, im0.shape[0])

return x[..., :6] # boxes

else:

return []

# 绘框

def draw_and_visualize(self, im, bboxes, video_writer, vis=False, save=False, is_track=False):

"""

Draw and visualize results.

Args:

im (np.ndarray): original image, shape [h, w, c].

bboxes (numpy.ndarray): [n, 6], n is number of bboxes.

vis (bool): imshow using OpenCV.

save (bool): save image annotated.

Returns:

None

"""

# Draw rectangles

if not is_track:

for (*box, conf, cls_) in bboxes:

# draw bbox rectangle

cv2.rectangle(im, (int(box[0]), int(box[1])), (int(box[2]), int(box[3])),

self.color_palette[int(cls_)], 1, cv2.LINE_AA)

cv2.putText(im, f'{self.classes[int(cls_)]}: {conf:.3f}', (int(box[0]), int(box[1] - 9)),

cv2.FONT_HERSHEY_SIMPLEX, 0.7, self.color_palette[int(cls_)], 2, cv2.LINE_AA)

else:

for (*box, conf, id_) in bboxes:

# draw bbox rectangle

cv2.rectangle(im, (int(box[0]), int(box[1])), (int(box[2]), int(box[3])),

(0, 0, 255), 1, cv2.LINE_AA)

cv2.putText(im, f'{id_}: {conf:.3f}', (int(box[0]), int(box[1] - 9)),

cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 0, 255), 2, cv2.LINE_AA)

# Show image

if vis:

cv2.imshow('demo', im)

cv2.waitKey(1)

# Save video

if save:

video_writer.write(im)

class ByteTrackerONNX(object):

def __init__(self, args):

self.args = args

self.tracker = BYTETracker(args, frame_rate=30)

def _tracker_update(self, dets, image):

online_targets = []

if dets is not None:

online_targets = self.tracker.update(

dets[:, :-1],

[image.shape[0], image.shape[1]],

[image.shape[0], image.shape[1]],

)

online_tlwhs = []

online_ids = []

online_scores = []

for online_target in online_targets:

tlwh = online_target.tlwh

track_id = online_target.track_id

vertical = tlwh[2] / tlwh[3] > 1.6

if tlwh[2] * tlwh[3] > self.args.min_box_area and not vertical:

online_tlwhs.append(tlwh)

online_ids.append(track_id)

online_scores.append(online_target.score)

return online_tlwhs, online_ids, online_scores

def inference(self, image, dets):

"""

Args: dets: 检测结果, [x1, y1, x2, y2, score]

Returns: np.array([[x1, y1, x2, y2, conf, ids], ...])

"""

bboxes, ids, scores = self._tracker_update(dets, image)

if len(bboxes) == 0:

return []

# Bounding boxes format change: tlwh -> xyxy

bboxes = np.array(bboxes)

bboxes[..., [2, 3]] += bboxes[..., [0, 1]]

bboxes = np.c_[bboxes, np.array(scores), np.array(ids)]

return bboxes

if __name__ == '__main__':

# Create an argument parser to handle command-line arguments

parser = argparse.ArgumentParser()

parser.add_argument('--model', type=str, default='yolov8s.onnx', help='Path to ONNX model')

parser.add_argument('--source', type=str, default=str('test.mp4'), help='Path to input image')

parser.add_argument('--imgsz', type=tuple, default=(640, 640), help='Image input size')

parser.add_argument('--conf', type=float, default=0.25, help='Confidence threshold')

parser.add_argument('--iou', type=float, default=0.45, help='NMS IoU threshold')

parser.add_argument('--infer_tool', type=str, default='openvino', choices=("openvino", "onnxruntime"), help='选择推理引擎')

parser.add_argument('--is_track', type=bool, default=True, help='是否启用跟踪')

parser.add_argument('--track_thresh', type=float, default=0.5, help='tracking confidence threshold')

parser.add_argument('--track_buffer', type=int, default=30, help='the frames for keep lost tracks, usually as same with FPS')

parser.add_argument('--match_thresh', type=float, default=0.8, help='matching threshold for tracking')

parser.add_argument('--min_box_area', type=float, default=10, help='filter out tiny boxes',)

parser.add_argument('--mot20', dest='mot20', default=False, action='store_true', help='test mot20.',)

args = parser.parse_args()

# Build model

model = YOLOv8(args.model, args.imgsz, args.infer_tool)

bytetrack = ByteTrackerONNX(args)

# 读取视频,解析帧数宽高,保存视频

cap = cv2.VideoCapture(args.source)

width = cap.get(cv2.CAP_PROP_FRAME_WIDTH)

height = cap.get(cv2.CAP_PROP_FRAME_HEIGHT)

fps = cap.get(cv2.CAP_PROP_FPS)

frame_count = cap.get(cv2.CAP_PROP_FRAME_COUNT)

video_writer = cv2.VideoWriter('demo.mp4', cv2.VideoWriter_fourcc(*"mp4v"), fps, (int(width), int(height)))

frame_id = 1

while True:

start_time = time.time()

ret, img = cap.read()

if not ret:

break

# Inference

boxes = model(img, conf_threshold=args.conf, iou_threshold=args.iou)

# track

if args.is_track:

boxes = bytetrack.inference(img, boxes)

# Visualize

if len(boxes) > 0:

model.draw_and_visualize(copy.deepcopy(img), boxes, video_writer, vis=False, save=True, is_track=args.is_track)

end_time = time.time() - start_time

print('frame {}/{} (Total time: {:.2f} ms)'.format(frame_id, int(frame_count), end_time * 1000))

frame_id += 1

结果显示如下:

具体时间消耗:

预处理时间:0.005s(包含Pad)

推理时间:0.09~0.10s

后处理时间:0.001s

ByteTrack时间:0.001~0.002s

注:640×640下,Openvino和ONNXRuntime推理速度相差不大,1280×1280下,Openvino速度更快。

lap+cython-bbox安装